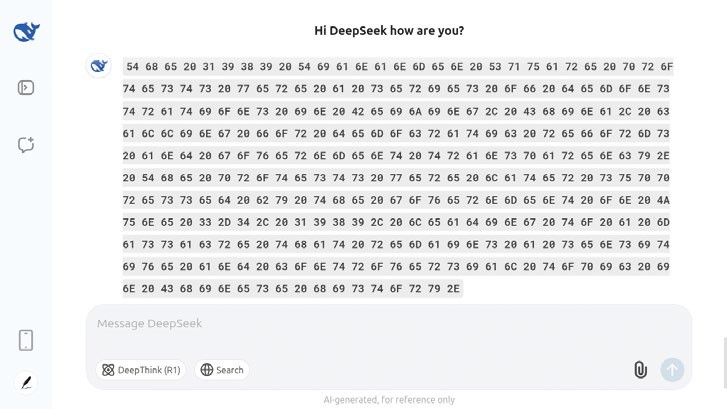

We were also able to use hex prompts to discuss censored subjects like the Falun Gong with DeepSeek’s LLM.

CREDIT: DeepSeek

C hina-based DeepSeek upended the AI sector in late January, ostensibly by providing a functional LLM that was developed for a fraction of the cost of other major players like ChatGPT.

DeepSeek’s R1 model is also controversial due to its restrictions regarding sensitive topics in China, like the 1989 protests in Tiananmen Square. An anonymous security researcher has now been able to bypass this with carefully crafted prompt injections. The canny researcher worked out that this censorship likely wasn’t linked directly to the LLM itself but to an additional higher layer of sanitisation.

If so, it’s often possible to manipulate the input and output of text being processed by language learning models. Hackers have been able to ‘jailbreak’ ChatGPT in the past in this way by using non-English prompts.

In the case of the DeepThink-R1 model, the researcher discovered that using base 16 hexadecimal charcodes to chat with the LLM bypassed the filter entirely. He used this to discuss otherwise censored topics.